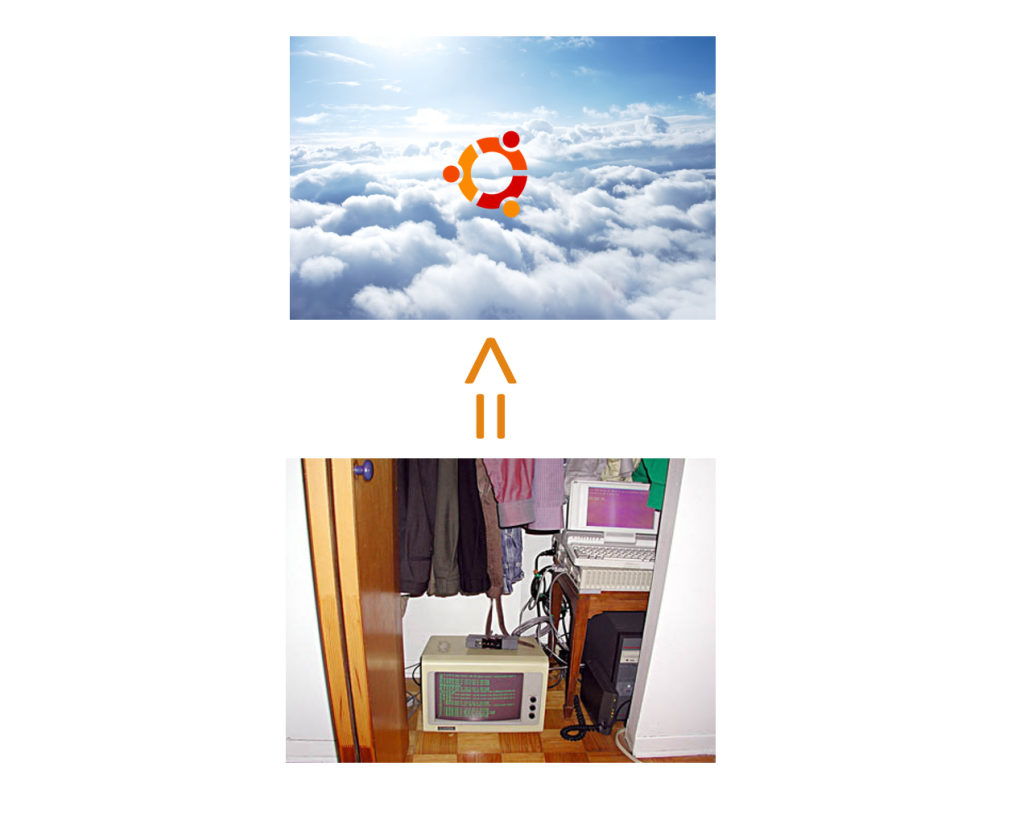

Do you operate servers that run on physical hardware on-premises, in a colocation facility, or in a DIY server closet? Do you need, or want, to bring those systems to the cloud? This presentation walks through the migration of a physical CentOS 5.x server to a cloud-hosted Ubuntu 16.04 virtual machine. The server in…